How To: Securing AKS (Kubenet) Egress with Aviatrix Firenet

AKS, or Azure Kubernetes Service, is Microsoft's managed Kubernetes offering, similar to EKS (AWS) or GKE (Google). AKS offers two CNI options for deployment: Kubenet and Azure CNI. This article focuses on integrating AKS Kubenet with Aviatrix.

Aviatrix is the leading vendor of Multicloud connectivity solutions.

"The Aviatrix cloud network platform delivers a single, common platform for multi-cloud networking, regardless of public cloud providers used. Aviatrix delivers the simplicity and automation enterprises expect in the cloud with the operational visibility and control they require."

Firenet on Azure enables Firewall insertion as a bump in the wire.

Prerequisites

Deploying Firenet on Azure is beyond the scope of this article. I'll assume that you have a working Transit Firenet deployment.

Diagram - To be added

Quick AKS CNI overview

- Kubenet

  - Azure's basic networking CNI.

  - The only routable IPs are the node IPs; pods and services are not routable outside of the AKS cluster.

  - Pod to outside world traffic is source NAT'd to the IP of the node that runs the pod.

  - Calico network policy is supported, Azure network policy is not.

- Azure CNI

  - Pod and node IPs share the same subnet and are routable.

  - Calico and Azure Network policy are supported.

  - Very heavy on IP usage; Aviatrix SNAT could be used to allow overlapping IPs.

- Azure CNI (preview)

  - Pod and node IPs are on the same VNET but different subnets.

  - Calico and Azure Network policy are supported.

  - Pod traffic leaving the VNET uses the node IP; traffic inside the VNET uses the pod IP.

  - Aviatrix could take advantage of the subnet separation and built-in ip-masq-agent functionality through custom spoke advertisements.
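To put the "heavy on IP usage" point in numbers, here is a rough sketch comparing how many VNET addresses each model consumes. It assumes one extra node's worth of addresses for upgrade surge and a max-pods value of 30 for Azure CNI; both are my assumptions for illustration and should be replaced with your own cluster settings. The function names are hypothetical.

```python
def kubenet_vnet_ips(nodes: int, surge_nodes: int = 1) -> int:
    """VNET addresses used with Kubenet: only the nodes take subnet IPs."""
    return nodes + surge_nodes


def azure_cni_vnet_ips(nodes: int, max_pods: int = 30, surge_nodes: int = 1) -> int:
    """VNET addresses used with Azure CNI: each node also reserves one IP per pod slot."""
    total_nodes = nodes + surge_nodes
    return total_nodes + total_nodes * max_pods


# Assumed example sizes, not taken from the deployment in this article.
COMPARISON = {n: (kubenet_vnet_ips(n), azure_cni_vnet_ips(n)) for n in (3, 10, 50)}
```

Even at a handful of nodes the Azure CNI figure is more than an order of magnitude larger, which is why overlapping address space and SNAT come up in that model.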

Terraform Deployment

The Portal and PowerShell/CLI provide highly automated ways to deploy AKS, but these methods do not always allow the deployment customizations that most users will want.

Since this is a repeatable, multistep process, I opted to use Terraform. Please see the Terraform here.

Azure pre-AKS deployments

- Resource Group

- User Assigned Identity

  - AKS will not deploy to a user-defined subnet, route table, or Private DNS zone with the default (system-assigned) Managed Identity.

  - A Service Principal will also work here.

- Role Assignment

  - The UAI needs to be assigned a role and scope. For simplicity, I granted Contributor access to the AKS resource group.

- VNET and AKS Subnet

  - Remember that in the Kubenet model, the AKS subnet only needs to be big enough to hold the nodes.

- Route Table associated to the AKS Subnet

  - Both AKS and Aviatrix will update this route table.

  - After AKS is deployed, a null default route is added. This tells Aviatrix that the AKS subnet is private and will need Transit Firenet egress.
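As a quick sanity check on subnet sizing, the sketch below uses Python's standard `ipaddress` module to estimate how many nodes a subnet can hold. It relies on Azure reserving five addresses in every subnet; the surge allowance of one node and the example CIDRs in the usage note are assumptions of mine, not values from the Terraform.

```python
import ipaddress

# Azure keeps five addresses per subnet: network, default gateway,
# two for Azure DNS, and broadcast.
AZURE_RESERVED_PER_SUBNET = 5


def usable_ips(subnet_cidr: str) -> int:
    """Number of addresses left for nodes after Azure's reserved ones."""
    net = ipaddress.ip_network(subnet_cidr)
    return max(net.num_addresses - AZURE_RESERVED_PER_SUBNET, 0)


def fits_nodes(subnet_cidr: str, max_nodes: int, surge_nodes: int = 1) -> bool:
    """True if the subnet can hold max_nodes plus an upgrade surge allowance."""
    return usable_ips(subnet_cidr) >= max_nodes + surge_nodes
```

For example, `fits_nodes("10.64.1.0/24", 100)` passes, while a /28 would not hold 11 nodes plus a surge node.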

AKS deployment

- Specified a private cluster - this feature uses Private Link to expose the K8S admin endpoint.

- Automatic Channel Upgrade was specified as stable.

  - I believe this is a bug in Terraform azurerm 2.79.0, as I did not have to specify this before and the setting defaulted to none.

- The subnet defined in the pre-deployment step is used.

- CIDRs are defined for the internal K8S resources - services, pods, docker bridge, DNS.

- The outbound type must be userDefinedRouting to use Firenet.
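The internal CIDRs are easy to get wrong, so here is a minimal pre-flight check, again with the standard `ipaddress` module. It assumes the usual AKS constraints: the internal ranges should not overlap the VNET or each other, and the DNS service IP has to sit inside the service CIDR without taking its first address. The function name and the example values in the usage note are hypothetical.

```python
import ipaddress
from itertools import combinations


def validate_aks_cidrs(vnet, service_cidr, pod_cidr, docker_bridge_cidr, dns_service_ip):
    """Return a list of problems found; an empty list means the plan looks sane."""
    vnet_net = ipaddress.ip_network(vnet)
    ranges = {
        "service": ipaddress.ip_network(service_cidr),
        "pod": ipaddress.ip_network(pod_cidr),
        # The docker bridge is usually written with host bits set (e.g. 172.17.0.1/16),
        # so parse it as an interface and take its network.
        "docker bridge": ipaddress.ip_interface(docker_bridge_cidr).network,
    }
    problems = []
    for name, net in ranges.items():
        if net.overlaps(vnet_net):
            problems.append(f"{name} CIDR {net} overlaps the VNET {vnet_net}")
    for (a, net_a), (b, net_b) in combinations(ranges.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{a} CIDR {net_a} overlaps {b} CIDR {net_b}")
    dns = ipaddress.ip_address(dns_service_ip)
    svc = ranges["service"]
    if dns not in svc:
        problems.append(f"DNS service IP {dns} is outside the service CIDR {svc}")
    elif dns in (svc.network_address, svc.network_address + 1):
        problems.append(f"DNS service IP {dns} uses a reserved address at the start of {svc}")
    return problems
```

Calling it with an assumed VNET of `10.64.0.0/22`, service CIDR `10.0.0.0/16`, pod CIDR `10.244.0.0/16`, docker bridge `172.17.0.1/16`, and DNS service IP `10.0.0.10` should return an empty list.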

Connecting to Aviatrix

- Spoke Gateway

  - Enabled High Performance Encryption

  - Enabled High Availability

  - Specified a /25 within the VNET address space for creation of the Spoke Gateway subnet.

  - Connected to a pre-existing Transit Firenet gateway.
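Picking the /25 for the Spoke Gateway can be done by hand, but a small helper makes it repeatable. This sketch walks the VNET address space and returns the first /25 that does not collide with a subnet already in use. The helper name and the CIDRs in the usage note are assumptions for illustration.

```python
import ipaddress


def first_free_subnet(vnet_cidr, used_cidrs, new_prefix=25):
    """Return the first subnet of size new_prefix in the VNET that avoids used_cidrs."""
    vnet = ipaddress.ip_network(vnet_cidr)
    used = [ipaddress.ip_network(c) for c in used_cidrs]
    for candidate in vnet.subnets(new_prefix=new_prefix):
        if not any(candidate.overlaps(u) for u in used):
            return candidate
    return None  # no room left in the VNET
```

With an assumed VNET of `10.64.0.0/22` and the AKS subnet at `10.64.1.0/24`, `first_free_subnet("10.64.0.0/22", ["10.64.1.0/24"])` yields `10.64.0.0/25`.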

Verification

After the Terraform run completes, there are a couple of items to check.

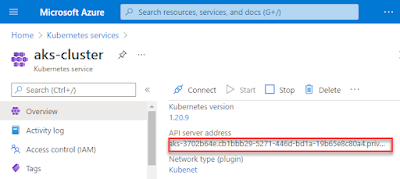

- Cluster - make sure the AKS Cluster is deployed. Make a note of the Private Link DNS name. This name is also included in the Terraform output.

- Private Link IP - click the Network Interface for the private endpoint.

- Route Table - there should be both Aviatrix and AKS routes in the table created.

Please note that 10.64.1.x are AKS node IPs. 10.64.0.4 is the IP address of the primary Aviatrix Transit Gateway.
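To reason about which entry in a mixed route table wins, it helps to remember that Azure picks the most specific matching prefix. The sketch below models that longest-prefix match. The route entries are invented for illustration (per-node pod CIDRs pointing at node IPs, everything else pointing at 10.64.0.4) and are not copied from the actual table.

```python
import ipaddress

# Hypothetical route table: (prefix, next hop). Not the real table from this deployment.
ROUTES = [
    ("10.244.0.0/24", "10.64.1.4"),  # assumed pod CIDR of one node, added by AKS
    ("10.244.1.0/24", "10.64.1.5"),  # assumed pod CIDR of another node, added by AKS
    ("10.0.0.0/8", "10.64.0.4"),     # assumed Aviatrix-programmed private range
    ("0.0.0.0/0", "10.64.0.4"),      # assumed default route toward Firenet egress
]


def next_hop(routes, destination):
    """Return the next hop of the most specific route that contains destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes
        if dest in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]
```

In this model, traffic to a pod address such as `10.244.1.7` stays on the node route, while anything else, including internet destinations, is handed to the Aviatrix gateway.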

Testing

There are several docker images that contain network diagnostic tools. I opted for this one as it contains sufficient tools to prove out the network.

- Add the Cluster API Server Address FQDN and Private Link IP to your hosts file.

- Log into the AKS cluster you just created.

- Deploy your test image and run your tests.

The ifconfig.me curl test and the test pod deployment show that traffic is routed via the centralized Aviatrix Transit Firenet.

I ran a curl of ifconfig.me which will return the Public IP. This Public IP matches one of my Firewall instances in Google Cloud. Further, I see traffic from the node IPs in the firewall logs.
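If the test image has Python available, the same check can be scripted rather than eyeballed. This is a minimal sketch: it assumes the plain-text endpoint `https://ifconfig.me/ip` is reachable from the pod, and the firewall addresses in the usage note are placeholders from a documentation range, not my real firewall IPs.

```python
import ipaddress
import urllib.request


def fetch_public_ip(url="https://ifconfig.me/ip", timeout=5):
    """Ask an external service which source IP it sees for this pod."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode().strip()


def egress_via_firewall(observed_ip, firewall_ips):
    """True if the observed public IP belongs to one of the firewall instances."""
    known = {ipaddress.ip_address(ip) for ip in firewall_ips}
    return ipaddress.ip_address(observed_ip) in known
```

Inside the pod, `egress_via_firewall(fetch_public_ip(), ["203.0.113.10", "203.0.113.11"])` should come back `True` once the placeholder list is replaced with the public IPs of your own firewall instances.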

Conclusion

AKS and Aviatrix Transit Firenet are easy to integrate properly in a consistent and automated fashion. Obviously, Kubernetes is a very large topic and there still is much to do.

Next up is to automate Ingress to a web app on the Cluster and enable NGFW inspection of the traffic.

Visit the Aviatrix website and set up a sales call. Let's see how easy we can make your Cloud networking journey.