Solved with Aviatrix: Securely bring Google-managed Apigee-X to another Cloud or Datacenter

How To:

Securely bring Google-managed Apigee-X to another Cloud or Datacenter

Product Focus

Google Apigee is one of the most popular tools to expose APIs to the wider world.

From the Apigee documentation: Apigee is a platform for developing and managing APIs. By fronting services with a proxy layer, Apigee provides an abstraction or facade for your backend service APIs and provides security, rate limiting, quotas, analytics, and more.

This blog is focused on Apigee-X, which is hosted in Google Cloud.

Aviatrix is the leading vendor of Secure Multicloud Networking.

From the website: The Aviatrix cloud network platform delivers a single, common platform for multi-cloud networking, regardless of public cloud providers used. Aviatrix delivers the simplicity and automation enterprises expect in the cloud with the operational visibility and control they require.

Aviatrix can easily connect Google Apigee-X to AWS, Azure, and on-prem backends with full encryption and visibility.

Background

Our customer has standardized on AWS and Google Cloud. The customer had already chosen Aviatrix to provide Transit, Visibility, and Security for AWS. Using Aviatrix to provide a consistent Google Cloud architecture was an easy decision.

Our customer has a large number of systems that exist not only in Google Cloud but also in AWS and in legacy datacenters. Typical API usage is from 3rd party vendor connections, mobile apps, and web pages. Access can be from anywhere.

These APIs are mission critical and revenue generating. Outages are unacceptable. To protect the APIs, Apigee-X was chosen to expose these systems. Apigee-X is deployed in multiple regions to protect availability.

Apigee-X Design

Google Apigee-X is a hybrid service; resource ownership is split between Google and the customer. Private Services Access is used to facilitate the resource relationship. The Service Producer VPC is allocated a CIDR by the customer when deploying Apigee-X. This VPC is peered to a Customer-owned VPC.

See Step 4: Configure service networking in the Apigee-X provisioning guide for more details.

The below image represents the Apigee-X Network flows. The original image is from the Architecture overview.

- Flow 1: Ingress flows to the Apigee-X Frontend in the peered Service VPC. These are generally from the Internet but could be Internal.

- Google's reference design uses their Global External HTTPS Load Balancer.

- The Load Balancer's backends are regional Managed Instance Groups running Linux/IPTables.

- The Backends must be in the same Global VPC.

- The MIGs NAT traffic to the real Apigee-X endpoint in the peered Service VPC.

- Flow 2: Flows south to backend API resources via Private Connectivity. Google's diagram places the backend in the VPC with the Ingress but it is (obviously?) oversimplified.

- Flow 3: Flows south to backend API resources over Public Internet. Our customer has a strict policy against this kind of traffic, so it will not be used.

Apigee-X Design Challenges

The above reference is fine if the network is simple. However, most companies will want multi-region support, firewall insertion, and visibility. Their endpoints will be spread across multiple networks in Cloud or on-prem. Backend Public IP connectivity needs to be reduced or eliminated.

What if we could simply merge the above simplicity with a larger network?

Challenge: Global VPC regional routing

Regional routing affinity in the VPC routing table can be difficult, especially when coupled with VPC peering.

- Dynamic (BGP) Routes are required to route regionally with effective failover.

- There is no way to specify regionality in a Static Route. You can't specify a route as preferred for US Central and another as preferred for EU West.

- Routes and VMs can be Network Tagged to simulate regional routing, but failover to other regions is a manual process. Tagged Routes do not cross the VPC peering and cannot be used by the Apigee-X Runtime.

- Google's Firewall Insertion reference runs into the same issue, as it specifies Static Routes to Network Load Balancers to direct traffic.

There are native ways to work around this issue, such as using VPN or Cloud Interconnect, but those introduce their own caveats.

Aviatrix Solution

The customer can use their existing Aviatrix deployment in AWS and simply add on the Google reference design. The solution enables regional routing, as well as the other Aviatrix advantages such as visibility, security, and easy Firewall Insertion.

Design Components

- Global Load Balancer: Sends client traffic to the MIGs.

- Global VPCs:

- apigee-service-vpc: This is Google's VPC in their diagram. This VPC is peered to the apigee-ingress-global VPC/Customer Global VPC.

- apigee-ingress-global: This is the Customer Global VPC equivalent in Google's architecture diagram above. It contains:

- MIG for IPTables (NAT on Flow #1)

- NCC/Cloud Router and Aviatrix Transit Gateway NIC as Router Appliance Spoke

- For each Google Cloud Region:

- transit: Regional Aviatrix Transit VPC with an HA Pair of Gateways. Each Gateway has a NIC in the apigee-ingress-global VPC, as mentioned above.

- transit-fw-exch: VPC for Aviatrix Transit to forward to the firewall.

- cp-external: Ingress/Egress Public VPC for firewall

- AWS us-east-1:

- Transit Firenet example with Spokes

- Connected to Google Transit using High Performance Encryption

Aviatrix Transit and NCC/Cloud Router (Dynamic BGP Routing)

This solution solves the Global VPC routing challenge. NCC is used to install Aviatrix routes into the apigee-ingress-global VPC routing table with regional affinity. These dynamic (BGP) routes are carried into the apigee-service-vpc using VPC peering and export custom routes.
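As a minimal sketch of the peering setting described above, the Python snippet below only assembles a `gcloud compute networks peerings update` command line; it does not run it. The peering name `servicenetworking-googleapis-com` and the project ID `my-project` are assumptions for illustration, so check the actual names in your own project before using anything like this.

```python
from __future__ import annotations

import shlex


def export_custom_routes_cmd(peering: str, network: str, project: str) -> list[str]:
    """Build the gcloud argv that enables custom-route export on one VPC peering."""
    return [
        "gcloud", "compute", "networks", "peerings", "update", peering,
        f"--network={network}",
        f"--project={project}",
        "--export-custom-routes",
    ]


cmd = export_custom_routes_cmd(
    peering="servicenetworking-googleapis-com",  # assumed peering name
    network="apigee-ingress-global",             # VPC name used in this post
    project="my-project",                        # hypothetical project ID
)

# Print the command for review instead of executing it.
print(shlex.join(cmd))
```

Printing the command rather than running it keeps the sketch safe to try; the dynamic routes only cross the peering once export is enabled on the customer side.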

Specific features:

Flows

- Flow 1: This is the standard GCP architecture, aside from Akamai.

- The API client reaches Akamai, which acts as the front end, over the Public Internet.

- Akamai proxies to the Global Load Balancer, also by Public Internet.

- The XLB directs traffic to the closest MIG using the Google Network.

- The MIG instance forwards traffic across the peering to the Apigee-X regional Load Balancer.

- Flow 2: An Apigee-X regional instance reaches out to a backend API.

- The Dynamic (BGP) routes received across the VPC peering direct the SYN to the Aviatrix Transit Gateway NIC in the appropriate region.

- As required, the Transit Gateway hairpins the SYN to the regional Firewall for flow inspection.

- If allowed, the Transit Gateway sends the SYN to the next hop. This could be:

- A Spoke Gateway attached to this Transit Gateway (not shown).

- Another Transit Gateway in GCP.

- Another Transit Gateway in AWS/Azure.

- An on-prem datacenter via IPSec or Cloud Interconnect (not shown).

- The SYN is eventually forwarded to the destination VPC Spoke or to the on-prem datacenter and on to the API backend.

- The backend responds with a SYN-ACK and the flow is eventually established.

Implementation

Note: Deployment of Apigee-X and the MIGs is beyond the scope of this document.

Prerequisites

- Apigee-X deployment CIDR: 10.10.0.0/21

- Existing Aviatrix Controller/deployment in any Cloud

- GCP Credentials for the Project onboarded.

- IP ranges for the subnets. I prefer to do aggregate blocks per Cloud and per region. Ex:

- us-west1: 10.3.0.0/16

- us-central1: 10.1.0.0/16

- us-east1: 10.2.0.0/16

- 4 private, unique ASNs: one per regional Aviatrix Transit Gateway pair and one shared by the Google Cloud Routers.

- us-west1: 64851

- us-central1: 64853

- us-east1: 64855

- All Cloud Routers: 64852

- Export Custom Routes is enabled on the apigee-ingress-global <-> apigee-service-vpc peering.
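Before deploying anything, it can help to sanity-check the address and ASN plan. The sketch below uses only the CIDRs and ASNs listed above; the helper names are made up for this example. It checks that no two blocks overlap and that every ASN falls in the 16-bit private range (64512 to 65534).

```python
import ipaddress
from itertools import combinations

# Values copied from the prerequisites list above.
APIGEE_CIDR = ipaddress.ip_network("10.10.0.0/21")
REGION_CIDRS = {
    "us-west1": ipaddress.ip_network("10.3.0.0/16"),
    "us-central1": ipaddress.ip_network("10.1.0.0/16"),
    "us-east1": ipaddress.ip_network("10.2.0.0/16"),
}
ASNS = {
    "us-west1": 64851,
    "us-central1": 64853,
    "us-east1": 64855,
    "cloud-routers": 64852,
}


def overlapping_pairs(named_networks):
    """Return the names of every pair of networks whose ranges overlap."""
    pairs = combinations(sorted(named_networks.items()), 2)
    return [(a, b) for (a, net_a), (b, net_b) in pairs if net_a.overlaps(net_b)]


def non_private_asns(asns):
    """Return entries whose ASN is outside the 16-bit private range."""
    return {name: asn for name, asn in asns.items() if not 64512 <= asn <= 65534}


plan = {**REGION_CIDRS, "apigee-x": APIGEE_CIDR}
print("overlaps:", overlapping_pairs(plan))
print("non-private ASNs:", non_private_asns(ASNS))
print("ASNs unique:", len(set(ASNS.values())) == len(ASNS))
```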

Subnets Deployed

Aviatrix Transit Gateways

Log into the Aviatrix Controller and click Multicloud Transit >> Setup. Fill out the form for Step 1, similar to the below.

- High Performance Encryption/Insane Mode is optional in this example.

- Be sure to pick a Gateway size that will handle 3+ NICs.

- The BGP over LAN subnet must be the regional subnet deployed in the apigee-ingress VPC.

- Important: Repeat this task 3 times for the 3 regions. Double check subnet locations.

Aviatrix Transit Gateways, HA

Once the Transits are built, we can then deploy the HA Gateway.

- For the Backup Gateway zone, pick a different zone.

- The BGP over LAN subnet must be the regional subnet deployed in the apigee-ingress VPC.

- Important: Repeat this task 3 times for the 3 regions. Double check subnet locations.

Gateway Configuration

Go to Multi-Cloud Transit >> Advanced.

Transit Peerings

These peerings enable failover routing. Ideally, traffic maintains regional affinity, but different routing patterns should emerge if there is a zonal or regional failure.

Go to the Multi-cloud Transit Peering workflow. The link is two entries below Setup, where the Transit Gateways were deployed.

- transit-uswest to transit-uscentral

- transit-uscentral to transit-useast

- transit-useast to transit-uswest
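The three peerings above form a full mesh of the regional Transits. As a small sketch (the function name is illustrative only), the same list can be generated for any number of Transit Gateways, which is handy when a fourth region is added later:

```python
from itertools import combinations

# Transit names as used in the peering list above.
TRANSITS = ["transit-uswest", "transit-uscentral", "transit-useast"]


def full_mesh(gateways):
    """Return every unordered pair of gateways, i.e. n*(n-1)/2 peerings."""
    return list(combinations(gateways, 2))


peerings = full_mesh(TRANSITS)
for left, right in peerings:
    print(f"{left} <-> {right}")
```

With three Transits this yields three peerings; a fourth region would raise the count to six.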

Aviatrix/NCC Integration

Up to this point, we haven't done any serious integration with the Apigee-X deployment's networking. We have NICs deployed in the apigee-ingress-global VPC that now need to be configured.

Aviatrix BGP over LAN

Go to the Multi-Cloud Transit >> Setup, then choose External Connection.

Choose External Device, BGP, and LAN to populate the full step.

- The above information will be used with NCC/Cloud Router configuration.

- The Purple box is the Aviatrix Transit Gateway's unique ASN.

- The Orange boxes represent the NCC/Cloud Routers' ASN.

- The Red boxes represent the NCC/Cloud Router's two peer IPs.

- These IPs are arbitrary but must be unique in the Transit Gateway BGP subnet.

- For the uswest gateway, the subnet CIDR is 10.3.1.0/28.

- Repeat this task 3 times for the 3 regions, updating the ASNs/IPs as appropriate.

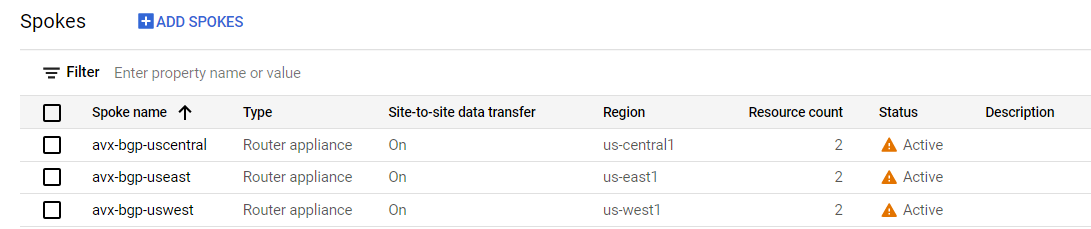
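Because the Cloud Router peer IPs are chosen by hand, a quick check can catch a bad pick before the BGP session fails to come up. The sketch below validates a plan against the us-west1 BGP subnet from above. The four addresses in the example are hypothetical, and the reserved-address rule reflects Google Cloud's documented behavior of holding back the first two and last two addresses of a primary subnet range.

```python
import ipaddress

BGP_SUBNET = ipaddress.ip_network("10.3.1.0/28")  # us-west1 BGP over LAN subnet


def gcp_reserved(subnet):
    """Network, default gateway, second-to-last, and broadcast addresses."""
    return {subnet[0], subnet[1], subnet[-2], subnet[-1]}


def check_bgp_lan_ips(subnet, gateway_ips, cloud_router_ips):
    """Return a list of problems found in the address plan (empty if none)."""
    problems = []
    addresses = [ipaddress.ip_address(ip) for ip in gateway_ips + cloud_router_ips]
    reserved = gcp_reserved(subnet)
    for addr in addresses:
        if addr not in subnet:
            problems.append(f"{addr} is outside {subnet}")
        elif addr in reserved:
            problems.append(f"{addr} is reserved by Google Cloud in {subnet}")
    if len(set(addresses)) != len(addresses):
        problems.append("duplicate address in plan")
    return problems


# Hypothetical addresses: two gateway NICs and two Cloud Router peer IPs.
print(check_bgp_lan_ips(BGP_SUBNET, ["10.3.1.2", "10.3.1.3"], ["10.3.1.4", "10.3.1.5"]))
```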

Google NCC/Cloud Router: Build Spokes

Under the Hybrid Connectivity section in the Google web console, go to Network Connectivity Center. Create a new Hub.

The red boxes show the configuration needed.

- Make sure to pick the correct region and VPC. Otherwise the association is created with the wrong NICs.

- Make sure Site-to-Site data transfer is on.

Click Add Spoke to add the additional 2 regions. Then click Create. Once deployed, we can see the Spokes displayed in the GUI.

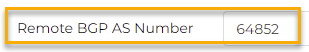

Google NCC/Cloud Router: Configure BGP

Click one of the Spoke names in the view above then expand the sections for each Aviatrix Gateway.

- Keep advertising existing visible routes.

- Add a custom route for the Service VPC CIDR. In this example, it is 10.10.0.0/21.

Click Create and Continue.

Note that the Peer ASN is the same as the Aviatrix Transit in that region.

The Cloud Router BGP IP is the same as specified for the Remote LAN IP.

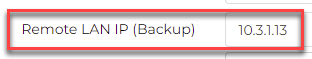

Everything is the same, except the Cloud Router BGP IP matches the Remote LAN IP (Backup) field.

Click Save and Continue.

Now choose Configure BGP Session for the HA Gateway.

Select the existing Cloud Router from the Primary gateway then Select & Continue. Edit the BGP session for line 1.

Since the Cloud Router is already configured, all we have to configure is a name and ASN. This is the same ASN for Aviatrix Transit that we configured before.

Click Save and Continue and then finish the other session as done previously.

This Spoke is now configured. Configure the BGP Peerings for the other spokes in the same way, keeping in mind the ASNs and IPs will change.

Once we confirm BGP is up and routes are exchanged, we can consider the implementation phase complete.

Testing

Verification - does the Service VPC CIDR make it where it should?

In the Aviatrix Controller, go to the Multi-Cloud Network >> List view.

All subnet routes are accounted for, including the custom route that represents the Service VPC. Please check the other Transit Gateways for the Service VPC route.

I ran traceroute from my laptop in my office. The packet is forwarded into the avx-uscentral-hagw, then to the Google VPC Routing table. The packet is routed globally and across the peering in the Google Network. Peering does not show up in a traceroute.

Verification - how about the peered VPC?

Does the peered VPC see all of the routes from the Transit? What about regional affinity?

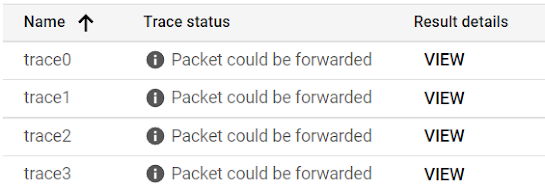

The only way I have found (so far) to prove this out from the Google API is to use Network Intelligence/Connectivity Center tests. This command appears to run a traceroute of sorts and is useful to see the computed next hops with dynamic routes. See the Into the weeds section at the bottom for some detail on the process.

I ran these connectivity tests against the VM from the previous section.

The above tests are in the normal state with all Gateways up. The test returns 4 paths, corresponding to the 4 BGP sessions established. Priority is 0, for same region routes.

Since there are really only 2 real paths, the Aviatrix Transit Primary and HA, there are duplicates.

Verification - what if a pair of Aviatrix Transits is down?

Since my test VMs are in us-west1, I'll shut down the same region Transit.

I still see the 4 identical paths, but now traffic is routed via us-central1 with priority 236. My destination IP (192.168.2.3) is connected by a VPN in us-central1, so this routing makes sense.
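The failover seen above can be pictured with a toy model: among the dynamic routes still being advertised, the lowest priority value wins. This is only a sketch of the observed behavior as seen from a us-west1 VM, not Google's route-selection algorithm. The priorities 0 and 236 come from the test results above; the gateway names other than avx-uscentral-hagw are assumed for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DynamicRoute:
    next_hop: str   # Aviatrix Transit Gateway acting as next hop
    region: str     # region of that gateway
    priority: int   # lower value is preferred


# Routes toward the on-prem prefix, as seen from a us-west1 VM.
ROUTES = [
    DynamicRoute("avx-uswest-gw", "us-west1", 0),          # assumed name
    DynamicRoute("avx-uswest-hagw", "us-west1", 0),        # assumed name
    DynamicRoute("avx-uscentral-gw", "us-central1", 236),  # assumed name
    DynamicRoute("avx-uscentral-hagw", "us-central1", 236),
]


def best_routes(routes, down_regions=frozenset()):
    """Return the surviving routes that share the lowest priority value."""
    live = [r for r in routes if r.region not in down_regions]
    if not live:
        return []
    lowest = min(r.priority for r in live)
    return [r for r in live if r.priority == lowest]


print([r.next_hop for r in best_routes(ROUTES)])
print([r.next_hop for r in best_routes(ROUTES, down_regions={"us-west1"})])
```

The first call models the normal state and the second models the us-west1 Transit pair being shut down.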

Into the weeds

Initially, I wanted to prove the on-prem prefixes are learned into the Service VPC with route affinity by showing route tables.

Strangely, the same test on a VM instance (us-west1) in the apigee-ingress-global VPC doesn't show different priorities.

The Cloud Router documentation states that dynamic routes have a priority assigned based on routing distance. The gcloud compute routes list command states that it won't return dynamic routes, referring us instead to the gcloud compute routers get-status command. However, that command only runs against a regional Cloud Router, not a VM Instance.
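For reference, here is a rough sketch of how the JSON from `gcloud compute routers get-status ROUTER_NAME --region=REGION --format=json` could be summarized per prefix. The sample dictionary is a trimmed, hypothetical response; the field names (`result`, `bestRoutes`, `destRange`, `nextHopIp`, `priority`) are my understanding of the response shape and should be checked against real output. It shows the Cloud Router's view only, which is exactly the limitation described above.

```python
from collections import defaultdict


def best_routes_by_prefix(status):
    """Group a router status response's best routes by destination prefix."""
    routes = status.get("result", {}).get("bestRoutes", [])
    grouped = defaultdict(list)
    for route in routes:
        grouped[route["destRange"]].append((route["priority"], route["nextHopIp"]))
    # Sort each prefix's next hops so the lowest priority comes first.
    return {prefix: sorted(hops) for prefix, hops in grouped.items()}


# Hypothetical, trimmed sample; real output carries many more fields.
sample_status = {
    "result": {
        "bestRoutes": [
            {"destRange": "192.168.2.0/24", "nextHopIp": "10.3.1.3", "priority": 100},
            {"destRange": "192.168.2.0/24", "nextHopIp": "10.3.1.2", "priority": 100},
        ]
    }
}

summary = best_routes_by_prefix(sample_status)
print(summary)
```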

I moved on to the Network Intelligence/Connectivity Center tests to see if I could coax out some more information. I ran a test to a firewall mgmt interface (192.168.2.3) in my on-prem lab for both the apigee-ingress-global VPC and the Service VPC test VMs.

In both cases, the tests succeeded and found 4 identical paths, save for the Peering hop on the Service VPC. The REST output returned more detail with the following route names:

- "displayName": "dynamic-route_17734467156087659059"

- "displayName": "dynamic-route_6076300278419112525"

- "displayName": "dynamic-route_11702578725044332292"

- "displayName": "dynamic-route_3878110521043239369"

The next hop is one of the two us-west1 Aviatrix Transit Gateways.
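Pulling those route names out of the REST output by hand gets tedious. The sketch below walks any parsed JSON document and collects every `displayName` value that starts with `dynamic-route_`, so it makes no assumption about where in the response the names sit. The nesting in the sample is hypothetical and heavily trimmed; only the two route names are taken from the output above.

```python
import json


def collect_values(node, key):
    """Recursively collect all string values stored under `key`."""
    found = []
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key and isinstance(v, str):
                found.append(v)
            else:
                found.extend(collect_values(v, key))
    elif isinstance(node, list):
        for item in node:
            found.extend(collect_values(item, key))
    return found


# Hypothetical, trimmed response shape for illustration only.
sample = json.loads("""
{
  "traces": [
    {"steps": [{"route": {"displayName": "dynamic-route_17734467156087659059"}}]},
    {"steps": [{"route": {"displayName": "dynamic-route_6076300278419112525"}}]}
  ]
}
""")

dynamic_routes = [
    name for name in collect_values(sample, "displayName")
    if name.startswith("dynamic-route_")
]
print(dynamic_routes)
```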

Conclusion: Google's general route and Cloud Router commands aren't appropriate for path testing. The Connectivity Center tests are an effective way to determine the paths in the present state.
