Solved with Aviatrix: Infoblox Anycast BGP on AWS/Azure

Infoblox Anycast BGP with Aviatrix

Matt Kazmar, Customer Solutions Architect

Infoblox is a well-known and widely used appliance for DNS, IPAM, and other functions. Like most appliances, there are versions for multiple Public Cloud providers.

Aviatrix is the leading vendor of Multicloud connectivity solutions.

"The Aviatrix cloud network platform delivers a single, common platform for multi-cloud networking, regardless of public cloud providers used. Aviatrix delivers the simplicity and automation enterprises expect in the cloud with the operational visibility and control they require."

Why do this?

DNS is a crucial requirement for any networking. Availability is a must, regardless of region. A DNS outage can cripple applications and business.

Infoblox offers a DNS solution (among other things) with robust replication and availability of DNS resources. In the on-prem world, its appliances peer with core switches and advertise the same IPs, regardless of location. This is Anycast with BGP. Any client, server, or device can query a single pair of DNS IPs and receive a result from the closest Infoblox appliance.
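As a rough illustration of the Anycast behaviour described above, the sketch below models several sites advertising the same DNS IP and a router choosing the advertiser with the shortest AS path. The site labels, path lengths, and the tie-break on site name are assumptions made for the example; a real BGP decision process weighs more attributes than path length.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnycastRoute:
    site: str             # hypothetical label for where the appliance lives
    as_path_len: int      # AS hops as seen from the querying side
    advertised: bool = True  # False once the appliance withdraws the route


def best_site(routes):
    """Return the site with the shortest AS path among active advertisers."""
    candidates = [r for r in routes if r.advertised]
    if not candidates:
        return None
    # Tie-break on site name only to keep the example deterministic.
    return min(candidates, key=lambda r: (r.as_path_len, r.site)).site


routes = [
    AnycastRoute("aws", as_path_len=2),
    AnycastRoute("azure", as_path_len=3),
]
print(best_site(routes))
```

If the closer site stops advertising, the same query IP simply resolves through the next-best site, which is the availability property that makes Anycast attractive for DNS.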

In Cloud, a single set of corporate DNS server IPs drives consistency. When creating a VNET or VPC, simply specify those IPs. Or, in the case of GCP, the same forwarders.

Why do this with Aviatrix?

Infoblox VMs in major clouds also support Anycast BGP. However, Cloud support of BGP is varied.

AWS: TGW Connect supports BGP over a GRE Tunnel to a Transit Gateway.

Azure: Route Server

GCP: Network Connectivity Center

Azure and GCP can work, although configuration options are quite limited. I don't believe Infoblox supports the GRE tunnel that AWS TGW Connect requires, so a third-party BGP solution is likely required.

Beyond BGP integration with the VPC or VNET, the native solutions have limitations with the route propagation to other VNETs or VPCs in the same Cloud, another Cloud, or to on-prem/colo. VPC Peering has transitivity limits. VPN has performance restrictions and its own interop headaches. Dedicated circuits such as ExpressRoute are expensive and require another third party to integrate.

Even if we solve for the above, there are consistency issues. Each Cloud is configured differently, requiring different tools and techniques. This increases your time to resolution, should there be a problem. The same applies to any similar workload and configuration deployed across different Clouds.

The Aviatrix platform complements Infoblox's Anycast DNS solution. Aviatrix provides straightforward BGP configuration on multiple Public Cloud platforms and can provide high-speed, encrypted connectivity between Clouds and to your colo or Datacenter.

Lab Environment

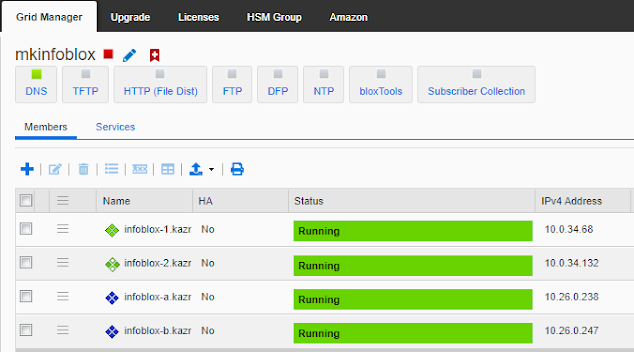

In my lab, I deployed Aviatrix Multicloud Transit across Azure, AWS, and GCP. Azure and AWS have a pair of Infoblox appliances each.

The following instructions assume a working knowledge of networking in the chosen Public Clouds.

Getting Started

- Deploy Aviatrix Controller - pick only one. We can also deploy in GCP and OCI.

- Add Accounts for access to Public Clouds:

- Add VPCs/VNETs - use CSP tools or our tool below:

- Create a VPC — aviatrix_docs documentation

- Each Transit needs a VPC or VNET.

- Azure's Infoblox deployments need to be in their own VNET.

- Create a single Spoke VPC for testing purposes.

- Deploy Transit Gateways and necessary Spoke Gateways.

- Multi-cloud Transit Network Workflow Instructions (AWS/Azure/GCP/OCI) — aviatrix_docs documentation

- Important: For Azure, be sure to check the BGP over LAN option.

- Important: For AWS, pick size c5n.4xlarge.

- Choose defaults otherwise.

- Enable HA for the Transit.

- Create a Spoke Gateway for the Testing Spoke VPC and attach it. Or, set up a VPN to your on-prem.

- Under Multi-cloud Transit Advanced Config:

- Assign a unique ASN

- AWS only: Enable Connected Transit

- AWS only: Enable Transit Advertised CIDRs

- Enable Transit Peering between the newly deployed Transit Gateways.

- Attach the Anycast VNET for Infoblox to the Azure Transit using Native Spoke Network Attachment.

- Optional: Deploy your Jumpbox in the Spoke VPC. Pick your poison.
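The "Assign a unique ASN" step is easy to get wrong once several Transit Gateways are involved. The sketch below checks a planned ASN assignment for duplicates before anything is configured. The gateway names and ASN values are hypothetical, and restricting the plan to private ASN ranges is an assumption suited to a lab, not something the steps above require.

```python
# Private-use ASN ranges (16-bit and 32-bit) per RFC 6996.
PRIVATE_ASN_RANGES = ((64512, 65534), (4200000000, 4294967294))


def is_private_asn(asn):
    """True if the ASN falls in a private-use range."""
    return any(low <= asn <= high for low, high in PRIVATE_ASN_RANGES)


def validate_asn_plan(plan):
    """Return a list of problems; an empty list means the plan looks sane."""
    problems = []
    seen = {}
    for name, asn in plan.items():
        if not is_private_asn(asn):
            problems.append(f"{name}: ASN {asn} is not in a private range")
        if asn in seen:
            problems.append(f"{name}: ASN {asn} already used by {seen[asn]}")
        else:
            seen[asn] = name
    return problems


# Hypothetical plan: one ASN per Transit Gateway.
plan = {"aws-transit": 65001, "azure-transit": 65002, "gcp-transit": 65003}
print(validate_asn_plan(plan))
```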

Deploy Infoblox

- Deployments have both LAN1 (eth0) and Mgmt (eth1) interfaces. Infoblox appliances seem to disallow use of the Mgmt interface, so ignore it.

- For the Security Group/NSG, ensure that the Infoblox subnets can communicate with each other and that the HTTPS/SSH management is accessible from your workstation.

- Official document: Azure

- Each LAN1 NIC must be in a different subnet for a fully supported scenario.

- Official document: AWS

- Each LAN1 NIC must be in a different subnet for a fully supported scenario.

- Collect the LAN1 IPs for each Infoblox VM.

- All Infoblox VMs should be reachable now on the internal IPs of the LAN interfaces.

- Run ping tests and curl the web interface from the Jumpbox in the spoke.
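The reachability check from the Jumpbox can also be scripted. This is a minimal sketch that only confirms a TCP connection to the HTTPS port on each LAN1 IP; it does not validate certificates or log in. The function names are made up for the example, and the IP list is something you supply from the previous step.

```python
import socket


def tcp_reachable(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False


def check_all(lan1_ips, port=443, timeout=3.0):
    """Map each LAN1 IP to whether its management port answered."""
    return {ip: tcp_reachable(ip, port, timeout) for ip in lan1_ips}
```

Calling `check_all([...])` with the collected LAN1 IPs returns a dictionary of IP to True/False, which makes it easy to spot a Security Group or NSG rule that was missed.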

Configure Aviatrix for BGP over LAN

- AWS instructions

- For AWS, order of operations is important. The Transit Gateway BGP over LAN is not generated until the peering is enabled.

- Be sure to collect the BGP over LAN IP. It is the interface suffixed with _eth4.

- Azure instructions

- Aviatrix requires that BGP over LAN occurs over a peering. This peering is under Remote VNet Name.

- Attaching the VNET with the Native Spoke Network Attachment, as in Getting Started, Step #7 accomplishes this task.

- Collect the BGP IPs in the screen - 10.0.36.36 and 10.0.36.44 in this case.

- Under Multi-cloud Transit Advanced Config, select each Transit Gateway that was configured with BGP. Set as below.

- Infoblox will only accept a default route over BGP. This was confirmed by support and within the CLI.

Configure Infoblox

- I configured all VMs to be on the same grid.

- I created a test zone and replicated to all devices.

- Enable Anycast

- Per the diagram, I used two Anycast IPs and assigned them to a pair of Infoblox appliances in each Cloud.

- Configure BGP and specify the neighbor as the eth1 IP of the corresponding Transit Gateway.

- Infoblox #1 -> Transit Gateway Primary

- Infoblox #2 -> Transit Gateway HA

- Note: If Firenet is enabled, the interface could be eth2 or eth3.

- Be sure to follow Step 3 and enable the Anycast IP to respond to DNS queries.

- If all is well, we will see the BGP peerings as up on the Controller.

- If the peerings don't come up, restart the Infoblox instances. Adding the Anycast IPs requires a service restart but it doesn't always prompt.
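To keep the neighbor assignments straight, it can help to write the pairing down as data before touching the Infoblox UI. The sketch below pairs each appliance with one Transit Gateway BGP IP in order (first appliance to Primary, second to HA). The Infoblox addresses and the ASN are hypothetical, and treating 10.0.36.36 as Primary and 10.0.36.44 as HA is an assumption; confirm the mapping on the Controller.

```python
ROLES = ("Transit Gateway Primary", "Transit Gateway HA")


def build_neighbor_plan(infoblox_lan1_ips, gateway_bgp_ips, gateway_asn):
    """Pair each Infoblox appliance with one gateway BGP IP, in order."""
    if len(infoblox_lan1_ips) != len(gateway_bgp_ips):
        raise ValueError("need exactly one gateway BGP IP per Infoblox appliance")
    if len(infoblox_lan1_ips) > len(ROLES):
        raise ValueError("this sketch only models a Primary/HA pair")
    return [
        {
            "infoblox": appliance_ip,
            "neighbor_ip": gateway_ip,
            "remote_asn": gateway_asn,
            "role": role,
        }
        for appliance_ip, gateway_ip, role in zip(
            infoblox_lan1_ips, gateway_bgp_ips, ROLES
        )
    ]


# Infoblox IPs and ASN are placeholders; gateway IPs come from the Azure step.
for entry in build_neighbor_plan(
    ["10.0.40.4", "10.0.41.4"], ["10.0.36.36", "10.0.36.44"], 65002
):
    print(entry)
```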

Routing in Cloud is Fun

While BGP is up, actual traffic is unlikely to work. I ran into an issue on each cloud.

Azure:

- When attaching an Azure VNET as a Native Spoke, route tables are created with RFC entries that point to the eth0 interface IP.

- Since Aviatrix uses the Weak Host Model, the routing isn't strictly the issue. eth0 has an NSG that only allows the VirtualNetwork service tag. This will block traffic from the Anycast IP.

- While you might be tempted to simply update the route tables, don't bother. On a reboot of the Transit Gateway, they will be rewritten.

- The solution is to add the Anycast IPs to the NSG for each Transit Gateway's eth0.

AWS:

- Source/destination checking must be disabled for each Infoblox LAN1 ENI.
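AWS drops packets whose source or destination is not an address assigned to the ENI, which is exactly what traffic to an Anycast IP looks like. A minimal sketch of disabling the check for several ENIs is below. It assumes you pass in a boto3 EC2 client (for example `boto3.client("ec2")`) with suitable credentials; the ENI IDs are placeholders you would replace with the LAN1 ENIs of your Infoblox instances.

```python
def disable_source_dest_check(ec2_client, eni_ids):
    """Turn off source/destination checking on each ENI and return the IDs changed."""
    changed = []
    for eni_id in eni_ids:
        # modify_network_interface_attribute is the EC2 API call for this setting.
        ec2_client.modify_network_interface_attribute(
            NetworkInterfaceId=eni_id,
            SourceDestCheck={"Value": False},
        )
        changed.append(eni_id)
    return changed
```

Taking the client as a parameter keeps the function easy to dry-run with a stand-in object before pointing it at a real account.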

Proof

These Anycast paths are the same length from my on-prem.

Path with AWS down:

Path with Azure down:

DNS resolution, regardless of path:
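To repeat the resolution check from a Jumpbox without extra tools, a small DNS query can be sent straight to an Anycast IP. This is a minimal sketch using only the standard library: it builds a single A-record query, sends it over UDP, and reads just the response header. The function names are invented for the example, and the server IP and zone name are whatever you configured; nothing here is specific to Infoblox.

```python
import socket
import struct


def build_query(name, query_id=0x1234, qtype=1):
    """Encode a single-question DNS query (qtype 1 = A record, class IN)."""
    # Header: ID, flags (recursion desired), QDCOUNT=1, then three zero counts.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)


def parse_header(response):
    """Return (id, rcode, answer_count) from a DNS response header."""
    query_id, flags, _qd, ancount, _ns, _ar = struct.unpack(
        "!HHHHHH", response[:12]
    )
    return query_id, flags & 0x000F, ancount


def query_anycast(server_ip, name, timeout=3.0):
    """Send one A query to server_ip and return the parsed response header."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name), (server_ip, 53))
        response, _ = sock.recvfrom(512)
    return parse_header(response)
```

An rcode of 0 with a non-zero answer count means the Anycast IP answered for the test zone. Running `query_anycast` before and after taking one Cloud's appliances down shows that the answer keeps arriving even though the path changes.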

Conclusion

Aviatrix makes this design very straightforward in both AWS and Azure. Note how similar the design patterns are: there are only minor differences due to the Cloud Provider.

Not touched on here is the fact that all of the Aviatrix (and Cloud) work can be automated with Terraform. Aviatrix CoPilot delivers full visibility to the DNS queries and the rest of the traffic on the network. We can insert firewalls to protect the DNS traffic or segment it for only certain Spoke VPCs or connections.

Visit the Aviatrix website and set up a sales call. Let's see how easy we can make your Cloud networking journey.
